The Edge Observability Security Challenge

Deploying an open-source observability solution to distributed retail edge locations creates a fundamental security challenge. With thousands of locations processing sensitive data such as payments and customers’ personally identifiable information (PII), every telemetry component running at the edge becomes a potential entry point for attackers. Edge environments offer limited physical security, network bandwidth that is shared with business-critical application traffic, and no technical staff on-site for incident response.

Therefore, traditional centralized monitoring security models do not fit these conditions, because they assume abundant resources, dedicated security teams, and controlled physical environments, none of which exist at the edge.

This article explores how to secure an observability framework built on Cloud Native Computing Foundation (CNCF) projects: OpenTelemetry (OTel) for metrics and distributed tracing, and Fluent Bit and Fluentd for logging.

Securing OTel Metrics

Mutual Transport Layer Security (TLS)

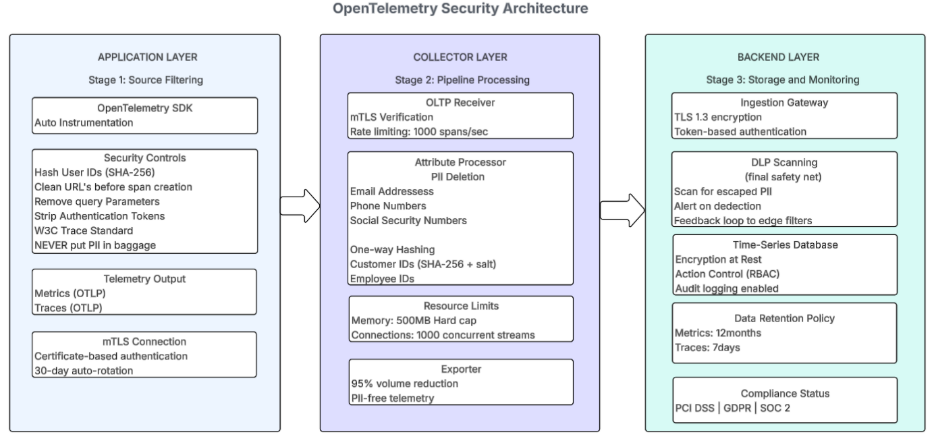

Metrics are secured with mutual TLS (mTLS) authentication, in which both the client and the server must prove their identity with certificates before communication is established. This ensures that only trusted systems exchange telemetry. Unlike traditional Prometheus deployments, in which every service exposes an unauthenticated Hypertext Transfer Protocol (HTTP) endpoint for scraping, OTel’s push model allows us to require mTLS for every connection to the collector (see Figure 1).

Figure 1: Multi-stage security through PII removal, mTLS communication, and 95% volume reduction


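Security configuration, otel-config.yaml

```yaml
receivers:
  # Server-side TLS only: the collector presents a certificate, but clients are
  # not authenticated. Shown for contrast; it is not referenced by any pipeline,
  # so the collector does not start it.
  otlp:
    protocols:
      grpc:
        endpoint: mysite.local:55690
        tls:
          cert_file: server.crt
          key_file: server.key
  # Mutual TLS: clients must present a certificate signed by the CA in client.pem.
  otlp/mtls:
    protocols:
      grpc:
        endpoint: mysite.local:55690
        tls:
          client_ca_file: client.pem
          cert_file: server.crt
          key_file: server.key

exporters:
  # Outbound mTLS: verify the backend with ca.crt and present a client certificate.
  otlp:
    endpoint: myserver.local:55690
    tls:
      ca_file: ca.crt
      cert_file: client.crt
      key_file: client-tss2.key

service:
  pipelines:
    metrics:
      # Only the mTLS receiver is enabled. Both receivers use the same port,
      # so they cannot run at the same time.
      receivers: [otlp/mtls]
      exporters: [otlp]
```

Multi-Stage PII Removal for Metrics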

Metrics often capture sensitive data by accident through labels and attributes. A customer identifier (ID) in a label, or a credit card number in a database query attribute, can turn compliant metrics into a regulatory violation. Multi-stage PII removal addresses this problem with defense in depth at the data level.

Stage 1: Application-level filtering.

The first stage happens at the application level, where OTel Software Development Kit (SDK) instrumentation hashes user identifiers with the SHA-256 algorithm before metrics are created. Uniform Resource Locators (URLs) are scanned to remove query parameters such as tokens and session IDs before they become span attributes.
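A minimal Python sketch of the two stage-1 steps, assuming the application prepares attribute values itself before handing them to the OTel SDK. The deny-list `SENSITIVE_QUERY_PARAMS`, the function names, and the attribute keys are hypothetical; SDK calls are omitted so the example stays self-contained.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical deny-list of query parameters that must never reach telemetry.
SENSITIVE_QUERY_PARAMS = {"token", "access_token", "session_id", "sessionid", "sid", "api_key"}


def hash_user_id(user_id: str) -> str:
    """Return the SHA-256 hex digest of a user identifier (unsalted)."""
    return hashlib.sha256(user_id.encode("utf-8")).hexdigest()


def scrub_url(url: str) -> str:
    """Drop deny-listed query parameters and the fragment from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SENSITIVE_QUERY_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


# Example: hypothetical attributes prepared before they reach a metric or span.
attributes = {
    "user.id_hash": hash_user_id("customer-8812"),
    "url.full": scrub_url("https://shop.example/cart?item=42&token=abc&session_id=xyz"),
}
```

An unsalted SHA-256 of a low-entropy identifier (a sequential customer number, for example) can be reversed by brute force, which is why the salted hashing in stage 2 matters.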

Stage 2: Collector-level processing.

The second stage occurs in the OTel Collector’s attributes processor, which applies three patterns: complete deletion of high-risk PII, one-way hashing of identifiers using SHA-256 with a cryptographic salt, and regex-based cleanup of complex values.
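The three collector-side patterns can be illustrated in Python as a plain function over an attribute dictionary. This is not the attributes processor's configuration; the attribute keys, the card-number regex, and the salt handling are hypothetical choices made for illustration.

```python
import hashlib
import re

# Hypothetical policy; real attribute keys depend on your instrumentation.
DELETE_KEYS = {"card.number", "customer.email", "customer.phone"}  # high-risk PII
HASH_KEYS = {"customer.id", "loyalty.id"}                          # identifiers to pseudonymize
# 13-19 digits, optionally separated by spaces or dashes (card-number shaped).
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def salted_sha256(value: str, salt: bytes) -> str:
    """One-way hash of value, prefixed with a secret salt."""
    return hashlib.sha256(salt + value.encode("utf-8")).hexdigest()


def scrub_attributes(attrs: dict, salt: bytes) -> dict:
    cleaned = {}
    for key, value in attrs.items():
        if key in DELETE_KEYS:
            continue  # pattern 1: delete high-risk PII outright
        if key in HASH_KEYS:
            cleaned[key] = salted_sha256(str(value), salt)  # pattern 2: salted one-way hash
        elif isinstance(value, str):
            cleaned[key] = CARD_RE.sub("[REDACTED]", value)  # pattern 3: regex cleanup
        else:
            cleaned[key] = value  # non-string values pass through unchanged
    return cleaned
```

The salt has to stay secret and be identical on every edge collector; otherwise the same customer would hash differently at different stores and the hashed IDs could no longer be correlated in the backend.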

Stage 3: Backend-level scanning.

The third stage is backend-level scanning: centralized systems perform data loss prevention (DLP) scanning to detect any PII that reached storage and trigger alerts for immediate remediation. Each detection also indicates that the edge filters need updating, creating a feedback loop that continuously improves protection.
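A backend DLP scanner can be sketched as pattern matching plus validation. This Python sketch uses hypothetical record and alert shapes, and it adds a Luhn checksum (a swapped-in technique, not something the article specifies) so that arbitrary digit runs are not reported as card numbers. Each alert carries the originating site ID so the filters at that edge location can be updated.

```python
import re
from dataclasses import dataclass

# Hypothetical detection patterns; a production scanner would use many more.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD_CANDIDATE_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")  # 13-19 digits


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


@dataclass
class PiiAlert:
    site_id: str       # edge location whose filters let the data through
    kind: str          # e.g. "email" or "card_number"
    record_index: int  # position of the offending record in the batch


def scan_records(site_id: str, records: list[str]) -> list[PiiAlert]:
    alerts: list[PiiAlert] = []
    for idx, text in enumerate(records):
        if EMAIL_RE.search(text):
            alerts.append(PiiAlert(site_id, "email", idx))
        for match in CARD_CANDIDATE_RE.finditer(text):
            if luhn_valid(re.sub(r"[ -]", "", match.group())):
                alerts.append(PiiAlert(site_id, "card_number", idx))
                break  # one card alert per record is enough
    return alerts
```

Grouping alerts by `site_id` gives the feedback loop a concrete target: the filters that need a new rule.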

Aggressive Metric Filtering

Security is not only about encryption and authentication; it is also about removing unnecessary data. Transmitting less data reduces the attack surface, shortens exposure windows, and makes anomaly detection easier. Hundreds of metrics may be available out of the box, but forwarding only the metrics you need can cut metric volume by up to 95%, saving compute resources and network bandwidth and removing management bottlenecks.
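Allowlist-based forwarding can be sketched as a simple name filter. The metric names and glob patterns below are hypothetical; inside the collector, the filter processor can play this role, but its configuration is not shown here.

```python
from fnmatch import fnmatchcase

# Hypothetical allowlist: exact names or shell-style globs.
METRIC_ALLOWLIST = (
    "http.server.request.duration",
    "pos.transactions.*",
    "system.cpu.utilization",
)


def is_allowed(metric_name: str) -> bool:
    """True if the metric name matches any allowlist entry (case-sensitive)."""
    return any(fnmatchcase(metric_name, pattern) for pattern in METRIC_ALLOWLIST)


def filter_metrics(metrics: list[dict]) -> list[dict]:
    """Keep only allowlisted metrics; everything else is dropped at the edge."""
    return [m for m in metrics if is_allowed(m["name"])]
```

An allowlist fails closed: a newly added metric, possibly carrying a PII-bearing label, is dropped until someone reviews it and adds it to the list.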

Resource Limits as Security Controls

The OTel Collector enforces strict resource limits that protect against denial-of-service attacks.

| Resource | Limit | Protection against |
|---|---|---|
| Memory | 500 MB hard cap | Out-of-memory attacks |
| Rate limiting | 1,000 spans/sec per service | Telemetry flooding attacks |
| Connections | 100 concurrent streams | Connection exhaustion |

These limits ensure that even when an attack happens, the collector maintains stable operation and continues to collect required telemetry from applications.
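The article does not say which component enforces the 1,000 spans/sec/service limit. A common way to implement such a limit is one token bucket per service; the Python sketch below assumes that design, with a bucket capacity of one second's worth of spans and an injectable clock for testing. The class names are hypothetical. (The 500 MB memory cap maps more naturally onto the collector's memory_limiter processor.)

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class TokenBucket:
    rate: float      # tokens refilled per second
    capacity: float  # maximum burst size
    tokens: float    # tokens currently available
    last: float      # timestamp of the previous refill

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerServiceSpanLimiter:
    """Admit at most spans_per_sec spans per service (bursts up to one second's worth)."""

    def __init__(self, spans_per_sec: float = 1000.0, clock: Callable[[], float] = time.monotonic):
        self.rate = spans_per_sec
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def admit(self, service: str) -> bool:
        now = self.clock()
        bucket = self.buckets.get(service)
        if bucket is None:
            # New services start with a full bucket.
            bucket = TokenBucket(self.rate, self.rate, self.rate, now)
            self.buckets[service] = bucket
        return bucket.allow(now)
```

Because each service has its own bucket, one noisy or compromised service cannot consume another service's budget. The map grows with every new service name, so a hardened version would also cap the number of buckets.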

Distributed Tracing Security

Trace Context Propagation Without PII

Distributed traces can be secured through the W3C Trace Context standard, which propagates context without exposing sensitive data. The traceparent header carries only a version, a trace ID, a parent span ID, and trace flags; no business data, user identifiers, or secrets belong in it (see Figure 1).
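To make the constraint concrete, here is a Python sketch that generates and strictly validates a version-00 traceparent header following the layout in the W3C Trace Context specification. Anything that does not match the fixed hex layout, including a header someone has stuffed with an email address, is rejected. The function names are hypothetical.

```python
import re
import secrets

# Version-00 layout: version-traceid-parentid-flags, all lowercase hex.
TRACEPARENT_RE = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Build a traceparent from random IDs only; no business data is involved."""
    trace_id = secrets.token_hex(16)  # 128-bit random trace ID
    span_id = secrets.token_hex(8)    # 64-bit random parent span ID
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def parse_traceparent(header: str):
    """Return the parsed fields, or None if the header is malformed or invalid."""
    match = TRACEPARENT_RE.match(header.strip())
    if match is None:
        return None
    version, trace_id, span_id, flags = match.groups()
    # All-zero IDs and version ff are invalid per the specification.
    if version == "ff" or trace_id == "0" * 32 or span_id == "0" * 16:
        return None
    return {"trace_id": trace_id, "span_id": span_id, "sampled": (int(flags, 16) & 1) == 1}
```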

Critical Rule Often Violated

Never put PII in baggage. Baggage is transmitted in HTTP headers across every service hop, creating multiple exposure opportunities through network monitoring, log files, and services that accidentally log baggage.
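A defensive guard can sit wherever baggage is set. This Python sketch serializes entries as comma-separated key=value pairs (the shape of the W3C Baggage header) but first drops any entry whose key or value looks like PII. The key hints and value regex are hypothetical, coarse heuristics: a backstop, not a substitute for the rule itself.

```python
import re
from urllib.parse import quote

# Hypothetical, deliberately coarse heuristics for spotting PII.
PII_KEY_HINTS = ("email", "name", "phone", "card", "ssn", "address")
PII_VALUE_RE = re.compile(r"@|\d{9,}")  # email-like values or long digit runs


def safe_baggage_header(entries: dict[str, str]) -> str:
    """Serialize baggage as key=value pairs, dropping entries that look like PII."""
    kept = []
    for key, value in entries.items():
        if any(hint in key.lower() for hint in PII_KEY_HINTS) or PII_VALUE_RE.search(value):
            continue  # never propagate suspected PII to downstream services
        kept.append(f"{key}={quote(value, safe='')}")  # percent-encode the value
    return ",".join(kept)
```

Dropping rather than raising keeps requests flowing in production; in tests you may prefer to raise so that violations surface early.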

Span Attribute Cleaning at Source

Span attributes must be cleaned before they are recorded: once a span ends it is read-only, and once it is exported, any PII it carries has already left the application. Common mistakes that expose PII include capturing full URLs with authentication tokens in query parameters, recording database queries that contain customer names or account numbers, capturing HTTP headers with cookies or authorization tokens, and recording error messages that echo sensitive data users submitted. Filter logic at the application level removes or hashes sensitive data before it becomes a span attribute.
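The common mistakes listed above can be guarded against with a sanitizer that runs before attributes are set on a span. In this Python sketch, the attribute keys are modeled on OpenTelemetry semantic conventions (check the convention version you use; names such as db.statement have changed over time), and the header deny-list and SQL literal regexes are hypothetical.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Hypothetical deny-list of headers that carry credentials.
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie", "x-api-key"}
HEADER_PREFIX = "http.request.header."
SQL_STRING_RE = re.compile(r"'(?:[^']|'')*'")  # single-quoted literals, '' escapes
SQL_NUMBER_RE = re.compile(r"\b\d+\b")          # standalone numeric literals


def sanitize_sql(statement: str) -> str:
    """Replace string and numeric literals with ? while keeping the query shape."""
    return SQL_NUMBER_RE.sub("?", SQL_STRING_RE.sub("?", statement))


def sanitize_span_attributes(attrs: dict) -> dict:
    clean = {}
    for key, value in attrs.items():
        if key.startswith(HEADER_PREFIX) and key[len(HEADER_PREFIX):].lower() in SENSITIVE_HEADERS:
            continue  # never record credentials carried in headers
        if key == "url.full":
            parts = urlsplit(value)
            # Drop the query string and fragment entirely.
            value = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
        elif key == "db.statement":
            value = sanitize_sql(value)
        clean[key] = value
    return clean
```

Dropping the whole query string is the most conservative option; it trades away some debugging detail for the guarantee that no token in a URL can reach a span.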

Security-Aware Sampling Strategy

Dropping 80-90% of normal-operation traces supports the data minimization principle of the General Data Protection Regulation (GDPR), while 100% of security-relevant events remain visible.

The following sampling approach serves both performance and security by intelligently deciding which traces to keep based on their value.

| Trace type | Sample rate | Rationale |
|---|---|---|
| Error spans | 100% | Potential security incidents require full investigation |
| High-value transactions | 100% | Fraud detection and compliance requirements |
| Authentication/authorization | 100% | Security-critical paths need complete visibility |
| Normal operations | 10-20% | Maintains statistical validity while minimizing data collection |
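The sampling table can be expressed as a per-trace keep/drop decision. This Python sketch assumes a tail-sampling setup in which a summary of each completed trace (error flag, transaction amount, entry route) is available; the high-value threshold, route prefixes, and class names are hypothetical. The ratio check uses the (assumed random) low 64 bits of the trace ID, so every collector reaches the same decision for the same trace. If you prefer configuration over code, the collector-contrib tail_sampling processor offers comparable policy types.

```python
from dataclasses import dataclass

# Hypothetical policy values; tune to your business and compliance needs.
HIGH_VALUE_THRESHOLD = 1000.00
AUTH_ROUTE_PREFIXES = ("/auth", "/login", "/oauth")
NORMAL_SAMPLE_RATE = 0.10  # the table allows 10-20%


@dataclass
class TraceSummary:
    trace_id: str          # 32 lowercase hex characters
    has_error: bool
    amount: float = 0.0    # transaction value, if any
    route: str = ""        # entry-point route of the trace


def ratio_keep(trace_id: str, rate: float) -> bool:
    """Deterministic probabilistic sampling on the low 64 bits of the trace ID."""
    return int(trace_id[-16:], 16) < rate * 2**64


def keep_trace(trace: TraceSummary, rate: float = NORMAL_SAMPLE_RATE) -> bool:
    if trace.has_error:
        return True  # error spans: 100%
    if trace.amount >= HIGH_VALUE_THRESHOLD:
        return True  # high-value transactions: 100%
    if trace.route.startswith(AUTH_ROUTE_PREFIXES):
        return True  # authentication/authorization: 100%
    return ratio_keep(trace.trace_id, rate)  # normal operations: sampled
```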

Logging Security With Fluent Bit and Fluentd

Real-Time PII Masking

Application logs are the highest-risk telemetry because they contain unstructured text that may include anything developers print. Masking PII in real time, before logs leave the pod, is the most critical security control in the entire observability stack. Scanning and masking take microseconds, adding minimal overhead to log processing, and any sensitive data that developers log by accident is caught before network transmission (see Figure 2).
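In the article's stack, masking runs inside Fluent Bit, implemented as Lua scripts in a sidecar. The logic itself is ordered regex substitution, sketched here in Python for readability; the three rules are hypothetical examples and far from exhaustive.

```python
import re

# Hypothetical rules, applied in order; real deployments need broader coverage.
MASKING_RULES = [
    # 13-19 digit card numbers (optional space/dash separators); keep the last four.
    (re.compile(r"\b(?:\d[ -]?){9,15}(\d{4})\b"), r"****-****-****-\1"),
    # US Social Security numbers in NNN-NN-NNNN form.
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "***-**-****"),
    # Email addresses.
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "<email>"),
]


def mask_line(line: str) -> str:
    """Return the log line with every rule's matches replaced."""
    for pattern, replacement in MASKING_RULES:
        line = pattern.sub(replacement, line)
    return line
```

Keeping the last four card digits preserves enough context for support staff to correlate a transaction without exposing the full number.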

Figure 2: Logging security enabled through two-stage DLP, real-time masking in microseconds, end-to-end TLS 1.2+, rate limiting, and zero log-based PII leaks


Security configuration, fluent-bit.conf

Secondary DLP Layer

Fluentd provides a secondary DLP scan, using different regex patterns designed to catch what Fluent Bit missed. These patterns cover private keys, newly identified PII formats, and other sensitive data, and they add context-based detection.
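A secondary pass pays off when it looks for different kinds of patterns than the first. This Python sketch, with hypothetical patterns, covers two of the categories mentioned: PEM-encoded private key blocks, and context-based detection, where a value is treated as secret because of the key in front of it rather than its own shape.

```python
import re

# A PEM private key block; if the END line was truncated, redact to end of record.
PRIVATE_KEY_RE = re.compile(
    r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----.*?(?:-----END (?:[A-Z]+ )?PRIVATE KEY-----|\Z)",
    re.DOTALL,
)
# Hypothetical context rule: the value is secret because of the key preceding it.
CONTEXT_SECRET_RE = re.compile(
    r"(?i)\b(password|passwd|secret|api[_-]?key|token)(\s*[=:]\s*)(\S+)"
)


def secondary_scan(record: str) -> tuple[str, list[str]]:
    """Return the redacted record and a list of finding labels."""
    findings: list[str] = []
    if PRIVATE_KEY_RE.search(record):
        findings.append("private_key")
        record = PRIVATE_KEY_RE.sub("[PRIVATE KEY REDACTED]", record)

    def _redact(match: re.Match) -> str:
        findings.append(f"secret:{match.group(1).lower()}")
        return match.group(1) + match.group(2) + "[REDACTED]"

    record = CONTEXT_SECRET_RE.sub(_redact, record)
    return record, findings
```

The findings list is what makes this a feedback mechanism: every hit here is a pattern the first stage missed.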

Encryption and Authentication for Log Transit

Logs are encrypted in transit with TLS 1.2 or higher, and every hop is authenticated: Fluent Bit authenticates to Fluentd using certificates (mutual TLS), and Fluentd authenticates to Splunk using tokens. This protects against eavesdropping on logs in transit, man-in-the-middle attacks that modify logs, and unauthorized log injection.
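The same transport policy (TLS 1.2 as a floor, certificates required on both sides) can be expressed with Python's standard ssl module, which is handy for testing a listener or client against it. The function names and file paths are placeholders. In Fluent Bit itself, the corresponding output settings are the tls.* options, such as tls.verify, tls.ca_file, tls.crt_file, and tls.key_file.

```python
import ssl


def harden(ctx: ssl.SSLContext) -> ssl.SSLContext:
    """Apply the transport policy: TLS 1.2 minimum, peer certificate required."""
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def receiver_context(cert_file: str, key_file: str, client_ca_file: str) -> ssl.SSLContext:
    """Server side (e.g. the log aggregator): present a cert and demand one from clients."""
    ctx = harden(ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER))
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


def sender_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Client side (e.g. the log forwarder): verify the server and present a client cert."""
    ctx = harden(ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file))
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

Setting verify_mode to CERT_REQUIRED on the server side is what turns ordinary TLS into mutual TLS: a forwarder without a certificate signed by the trusted CA fails the handshake.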

Rate Limiting as Attack Prevention

Preventing log flooding addresses both performance and security issues. An attacker who generates a massive volume of logs can hide malicious activity in the noise, fill disks to cause a denial of service, overwhelm centralized log systems, or drive up cloud costs until logging is disabled to save money. Rate limiting at 10,000 logs per minute per namespace mitigates these attacks.
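A per-namespace limit of 10,000 logs per minute can be sketched as a fixed-window counter. This is an assumed design, since the article does not specify the algorithm, and the class name is hypothetical. It also keeps a cumulative count of dropped records, because a sudden spike in drops is itself a signal worth alerting on.

```python
from collections import defaultdict


class NamespaceLogLimiter:
    """Fixed one-minute windows: at most max_per_minute records per namespace."""

    def __init__(self, max_per_minute: int = 10_000):
        self.max_per_minute = max_per_minute
        self.window = {}                 # namespace -> current minute index
        self.counts = defaultdict(int)   # records admitted in the current window
        self.dropped = defaultdict(int)  # cumulative dropped records

    def admit(self, namespace: str, ts: float) -> bool:
        minute = int(ts // 60)
        if self.window.get(namespace) != minute:
            self.window[namespace] = minute  # new window: reset the counter
            self.counts[namespace] = 0
        if self.counts[namespace] >= self.max_per_minute:
            self.dropped[namespace] += 1
            return False
        self.counts[namespace] += 1
        return True
```

Fixed windows are simple but can admit up to twice the limit across a window boundary; a sliding window or token bucket smooths that out at the cost of a little more state.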

Security Comparison: Three Telemetry Types

| Aspect | Metrics (OTel) | Traces (OTel) | Logs (Fluent Bit/Fluentd) |
|---|---|---|---|
| Primary Risk | PII in labels/attributes | PII in span attributes/baggage | Unstructured text with any PII |
| Authentication | mTLS with 30-day cert rotation | mTLS for trace export | TLS 1.2+ with mutual auth |
| PII Removal | 3-stage: App → Collector → Backend | 2-stage: App → Backend DLP | 3-stage: Fluent Bit → Fluentd → Backend |
| Data Minimization | 95% volume reduction via filtering | 80-90% via smart sampling | Rate limiting + filtering |
| Attack Prevention | Resource limits (memory, rate, connections) | Immutable spans + sampling | Rate limiting + buffer encryption |
| Compliance Feature | Allowlist-based metric forwarding | 100% sampling for security events | Real-time regex-based masking |
| Key Control | Attribute processor in collector | Cleaning before span creation | Lua scripts in sidecar |

Key Outcomes

- Secured open-source observability across distributed retail edge locations

- Achieved full Payment Card Industry Data Security Standard (PCI DSS) and GDPR compliance

- Reduced bandwidth consumption by 96%

- Minimized attack surface while maintaining complete visibility

Conclusion

Securing a CNCF-based observability framework at the retail edge is both achievable and essential. By implementing comprehensive security across OTel metrics, distributed tracing, and Fluent Bit/Fluentd logging, organizations can achieve zero security incidents while maintaining complete visibility across distributed locations.