Retrieval-augmented generation (RAG) has emerged as a powerful technique for building AI systems that can access and reason over external knowledge bases. RAG enabled us to build accurate and up-to-date systems by combining the content-generative capabilities of LLMs with user-context-specific, precise information retrieval.

However, deploying RAG systems at scale in production reveals a different reality that most blog posts and conference talks gloss over. While the core RAG concept is straightforward, the engineering challenges required to make it work reliably, efficiently, and cost-effectively at production scale are substantial and often underestimated.

This article analyzes the five critical data engineering challenges that we encounter when taking RAG from prototype to production, and makes an honest effort to share practical solutions for addressing them.

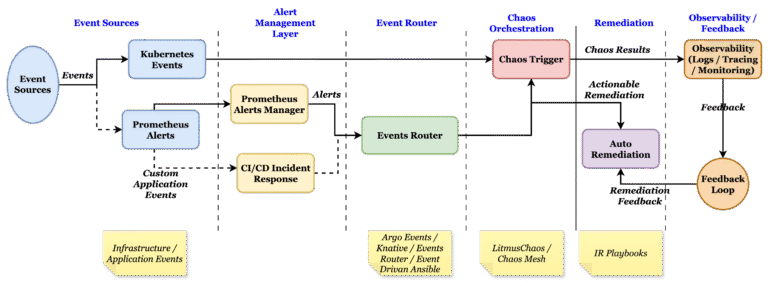

The Ideal RAG Flow

This is what most of the tutorials or blogs show you. But production systems are far messier than what you see here.

Multiple Complex Layer Data Engineering

At the production scale, RAG systems involve multiple complex data engineering layers:

- Data ingestion: Responsible for handling heterogeneous data sources, formats, and update frequencies.

- Data preprocessing: Responsible for cleaning, normalization, deduplication, and quality assurance. In some cases, this is an optional layer or merged with the data ingestion layer itself.

- Chunking strategy: One of the complex layers in the flow, which determines “configurable” optimal document segmentation and metadata/relationship preservation for high accuracy retrieval.

- Embedding generation: At production scale, creating embeddings for millions/billions of documents interacting with LLMs/custom models (e.g., bge-large) is a high-computation-hungry layer.

- Vector storage: Specialized vector database layer for managing embedding indexes with sharding/partitioning, replication, and maintaining consistency between the replicas.

- Retrieval ranking: Most of the time, this layer is a combination of more than one source, which is responsible for implementing multiple relevance signals beyond vector similarity. In many cases, GraphRAG is also combined within this layer.

- Context assembly: Combining and building coherent prompts from multiple sources by preserving an already existing context/conversation.

- Quality monitoring: Layer responsible for retrieval failures, hallucinations, and data drift.

- Feedback loops: Continuously improving retrieval and generation quality based on user feedback/tagging.

The Critical Challenges

Let us deep-dive into the five critical data engineering challenges and the practical solutions to overcome them in real-world scenarios.

1. Data Quality and Source Management

RAG systems are only as good as their underlying data. Still, most teams focus exclusively on the LLM/model(s) component and treat data ingestion as an afterthought.

Real-world data sources typically contain various data formats (e.g., HTML, Markdown, PDF, tables, images, etc.). Most of the documents lack metadata, and some are missing timestamps. There will be duplicates, or they may be expressed slightly differently across multiple formats. Also, sensitive information such as PII, credentials, or confidential information is buried within regular public data. Multilingual/non-English characters pose character-set-related challenges while ingesting and segmentation.

Impact at scale: When you have millions of documents and millions of queries hitting your system daily, even a 1% data quality issue affects thousands of users. Poor data quality leads to:

- Unrelated document retrievals, in turn, waste LLM context tokens

- Compliance violations from exposing sensitive information to LLMs

- Inconsistent behavior that erodes user trust in the overall system itself

Solution:

- Data quality framework: Define the data quality metrics and implement data validation at the ingestion layer itself. Always use schema validation and data profiling tools while ingesting the data. Flag the non-compliance documents for further review. Also, ensure organization-specific AI and data compliance is adhered to.

- Build a data catalog: Track lineage and provenance, maintain a clear source, and document when it was last verified. This helps you trace and isolate the documents that impact relevance.

- Deduplication and versioning: Implementing fuzzy matching to catch near-duplicates and hash matching for exact duplicates helps reduce duplicates. Always maintain a canonical version when conflict exists. Human-in-the-loop will help eliminate unrelated documents.

2. Chunking Strategy and Embedding Semantics

How you split the documents into chunks for embedding generation has massive downstream implications. But unfortunately, there’s no universal best strategy.

Common chunking problems:

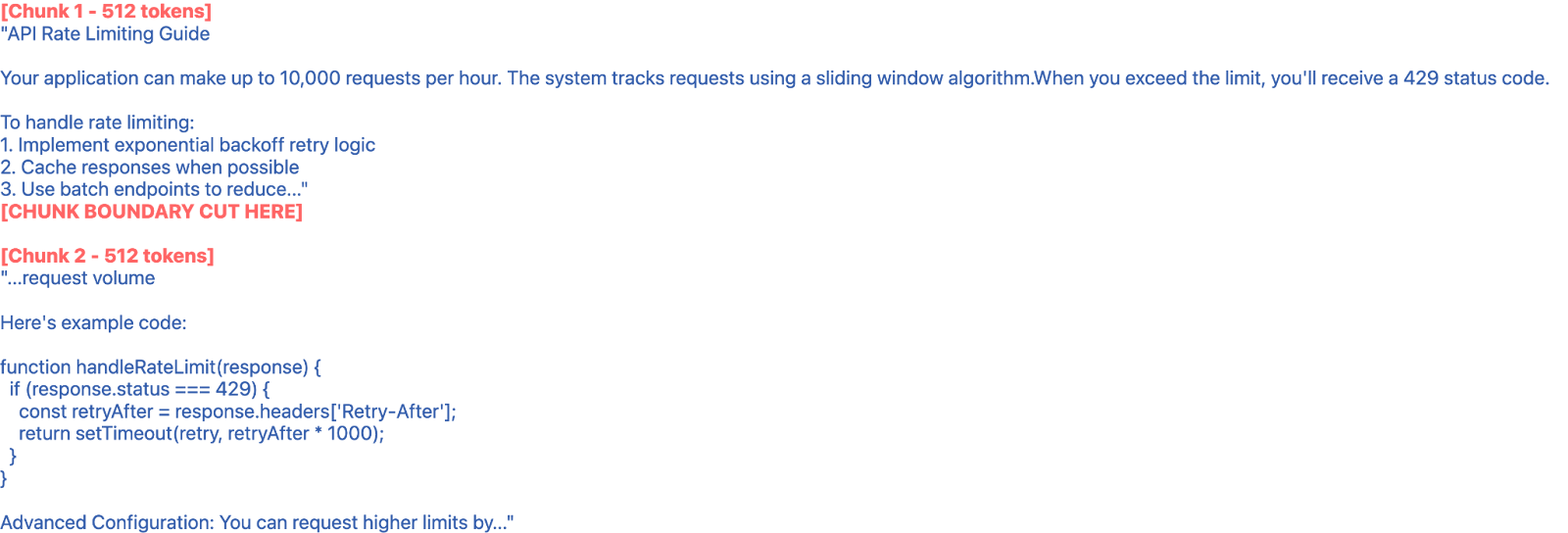

User query: “How do I handle 429 errors when making API calls?”

- Chunk 1 contains the general explanation of 429 errors, but cuts off the detailed solution.

- Chunk 2 contains the practical code example but starts mid-sentence (“…request volume”).

Neither chunk individually contains the complete context for the user query. The code example in Chunk 2 lacks the problem context (429 errors), so embeddings don’t strongly match the query.

Result: The document may score lower than it should or be ranked below less relevant documents.

Issues that emerge at scale:

- Fine-grained semantic chunking requires LLM calls, which increase the cost with document volume and frequency of updates. This also becomes a factor in query latency during inference.

- Going with a fixed chunk size? Then it does not account for the LLM context length. So, overlapping chunks will waste the context tokens.

- Chunking often strips important metadata from the document, such as section hierarchy, tables, and figures. This leads to related chunks becoming disconnected, making it very difficult to reconstruct the document structure unless complex relationship information is kept.

- Different embedding models have different optimal chunk lengths.

Solution:

As one measure does not fit all, adopting a hybrid chunking strategy will solve many of the issues described above. But the solution becomes complex and attracts high maintenance and computing costs.

- Identifying the natural boundaries like paragraphs, sections, code blocks, or tables to preserve the context while chunking. It is complex and needs to maintain rules for different content types.

- Applying variable-length chunking based on content type. This can be done by domain experts and with content knowledge.

- Create overlapping chunks for boundary preservation. This comes with an expense of context tokens.

- Store the relationship between chunks, so that the hybrid reranker process can stitch them together upon retrieval. This step adds latency, but data quality will be better and more reliable.

3. Embedding Generation and Vector Index Management

Generating embeddings for millions of documents and maintaining those indexes at scale introduces significant operational challenges. Embedding models are computationally intensive. Managing the vector databases in high availability mode is also operationally intensive.

Scaling issues:

- Re-embeddings on document updates can quickly become expensive, combined with update frequency. Incremental update and indexing are challenging while maintaining the query performance. In-place replacement or deleting older documents is an expensive operation in any vector database.

- Backup and disaster recovery of vector indexes/databases are non-trivial.

Solution:

- Efficient embedding pipelines: Use batch processing with GPU power. Make use of write-through cache for unchanged content. Maintaining the document change timestamps is crucial.

- Vector indexing: Use approximate nearest neighbor (ANN) indices, not exact search. Plan to rebuild indexes during non-peak load hours or switch between data centers/regions for vector database maintenance.

- Embedding model governance: Lock embedding models in prod. Document and plan a migration plan for model updates. Adopt A/B testing while rolling out new models.

4. Retrieval Quality and Ranking

In most systems with multiple data sources and relational data, vector similarity alone is insufficient for production RAG systems. It could bring in non-relevant documents and impact the context, in turn affecting response quality.

Example retrieval failure:

User query: “How do I configure the authentication module?”

Vector similarity might retrieve:

- Documentation on authentication (good)

- Code file containing “auth” string (bad)

- Security advisory mentioning authentication (irrelevant)

But what is missing?

- Documents from your specific version of software

- Recently modified configuration guide

- Group or Community discussions with similar related questions

- Troubleshooting guides from official websites

Solution:

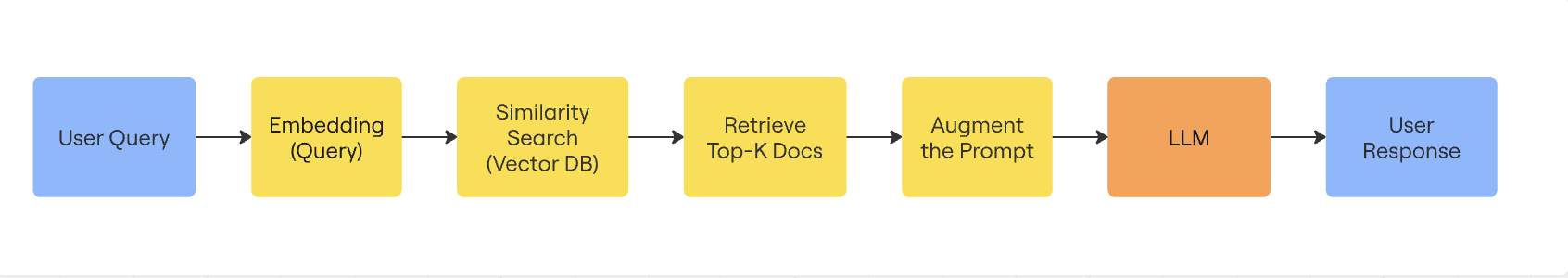

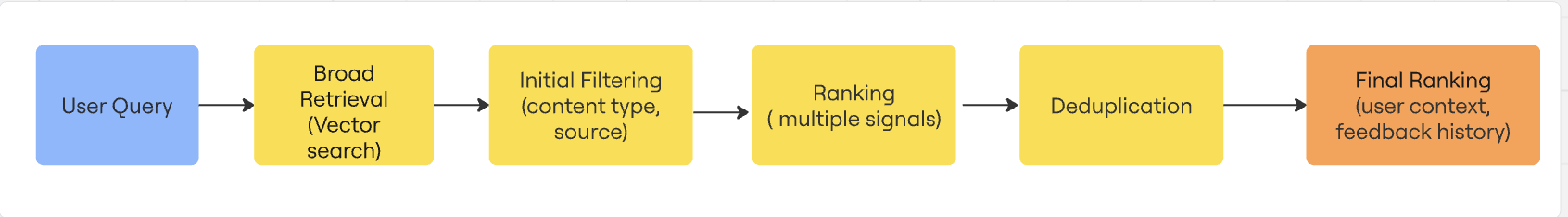

-

Multi-stage retrieval and re-ranking methods will resolve this issue but introduce complexity and latency. The figure below shows the stages involved in multi-stage retrieval:

- Adopt composite ranking signals like vector similarity ( 65%-70% weight), BM25/ keyword matching (10%-15% weigh), document freshness score (5%-10% weight), Document authority score based on source /lineage (5%-10% weight), and user interaction history or feedback (5% weight).

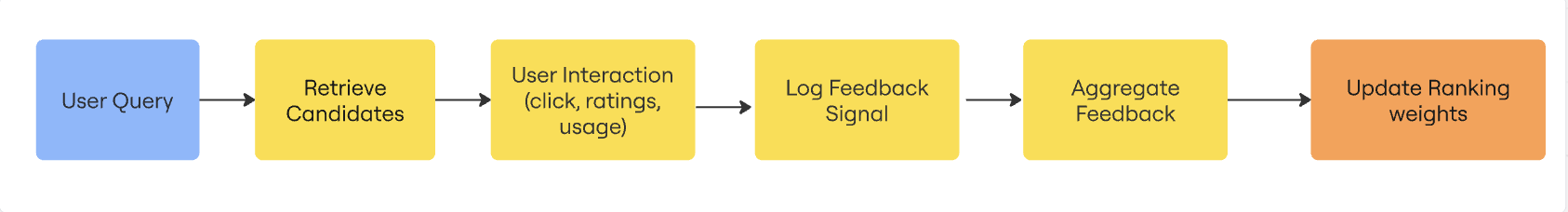

- Establish a user feedback loop to tag irrelevant documents and filter them out in subsequent retrieval. Please note that user feedback is always subjective and should be taken with full context. So, aggregating the user feedback and updating the ranking weights should be done with at most precision and domain knowledge.

5. Quality Monitoring and Observability

RAG systems are extremely difficult to monitor and debug at scale. Traditional ML metrics do not capture the full end-to-end picture of the system due to the many moving parts online.

Monitoring blind spots:

- Document retrieval success is invisible, as the relevance of the document is subjective. You can not know if the best document retrieved was best without human intervention or user feedback.

- LLM hallucinations are often caused by poor document retrieval, so we need to correlate retrieval quality with generation quality.

- Silent failures, such as outdated documents, can lead to issues that may not manifest for weeks or months. This will lead to a decline in user satisfaction.

Solution:

- Establish a comprehensive monitoring dashboard to include retrieval metrics like hit rate, precision@k, MRR, etc.

- Continuously evaluate relevance by sampling N% of queries daily (or at a regular interval based on traffic patterns).

- Create an extensive alerting strategy on drop in hit rate, latency, and user feedback trends.

Track A/B test configurations and results during the production rollouts with model changes.

Conclusion

Building RAG systems that work at scale requires addressing data engineering challenges up front, not as an afterthought. The transition from prototype to production RAG system is not just a scaling exercise. It is an architectural challenge that demands careful thought about data quality, retrieval effectiveness, cost management, and operational reliability. Start with small, measure everything, and scale gradually. In short, focus on evolution, then revolution. Happy building!