The nine-armed octopus and the oddities of the cephalopod nervous system

Nine arms, no problem. In 2021, researchers from the Institute of Marine Research in Spain used…

The Growing Importance of Platform Teams. Platform teams have emerged as a crucial component in modern…

Let’s get something straight: Duolingo is a brilliant app. It’s become a household name in the…

When I first tried to accept payments on my WordPress website, the process was frustrating. Hours…

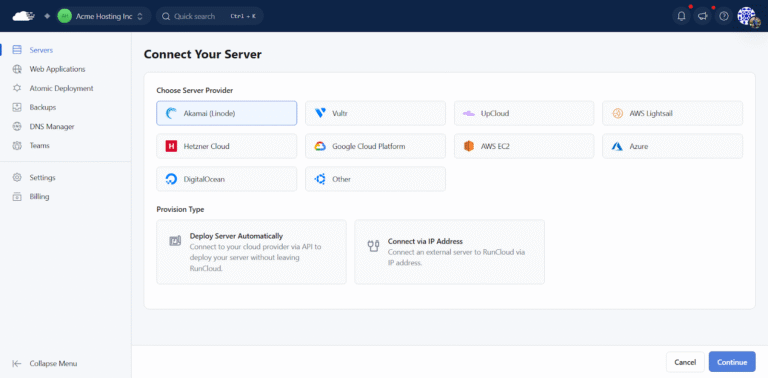

Tired of cPanel’s limits? You’re not alone. If you’ve ever been frustrated by slow performance during…

Since Google released its Veo 3 AI model last week, social media users have been having…

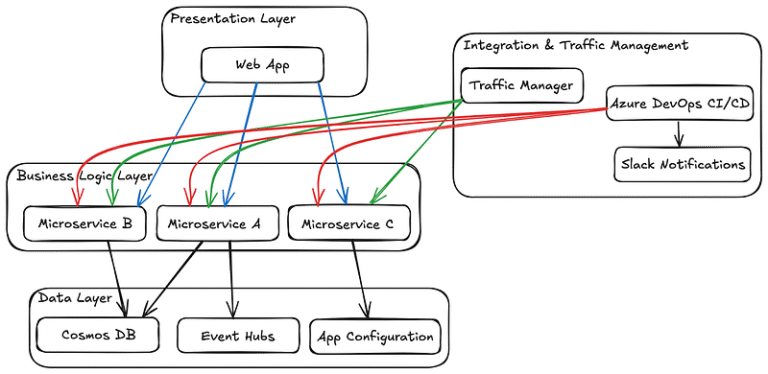

Scaling microservices for holiday peak traffic is crucial to prevent downtime and ensure a seamless user…

May has been a month of big changes in the WordPress ecosystem. From enhanced SEO features…

Something Missing on Your Landing Page? It’s Probably This. You’ve been there. Staring at your landing…

Today, I’m pleased to announce the formation of a new WordPress AI Team, a dedicated group…

In today’s rapidly changing world of online business, it is essential to stay ahead of the…

Are you looking for lightning-speed websites but unable to afford costly hosting plans that don’t fully…

In 1901, divers collecting sponges uncovered a shipwreck that dates to the 1st century BCE off…

The ammonia from the guano does not form the particles but supercharges the process that does,…