You think you know your SDLC like the back of your carpal-tunnel-riddled hand: You’ve got your gates, your reviews, your carefully orchestrated dance of code commits and deployment pipelines.

But here’s a plot twist straight out of your auntie’s favorite daytime soap: there’s an evil twin lurking in your organization (cue the dramatic organ music).

It looks identical to your SDLC — same commits, same repos, the same shiny outputs flowing into production. But this fake-goatee-wearing doppelgänger plays by its own rules, ignoring your security governance and standards.

Welcome to the shadow SDLC — the one your team built with AI when you weren’t looking: It generates code, dependencies, configs, and even tests at machine speed, but without any of your governance, review processes, or security guardrails.

Checkmarx’s August Future of Application Security report, based on a survey of 1,500 CISOs, AppSec managers, and developers worldwide, just pulled back the curtain on this digital twin drama:

- 34% of developers say more than 60% of their code is now AI-generated.

- Only 18% of organizations have policies governing AI use in development.

- 26% of developers admit AI tools are being used without permission.

The problem isn’t just insecure code sneaking into production. It’s losing ownership of the very processes you’ve worked to streamline.

Your “evil twin” SDLC comes with:

- Unknown provenance → You can’t always trace where AI-generated code or dependencies came from.

- Inconsistent reliability → AI may generate tests or configs that look fine but fail in production.

- Invisible vulnerabilities → Flaws that never hit a backlog because they bypass reviews entirely.

This isn’t a story about AI being “bad”, but about AI moving faster than your controls — and the risk that your SDLC’s evil twin becomes the one in charge.

The rest of this article is about how to prevent that. Specifically:

- How the shadow SDLC forms (and why it’s more than just code)

- The unique risks it introduces to security, reliability, and governance

- What you can do today to take back ownership — without slowing down your team

How the Evil Twin SDLC Emerges

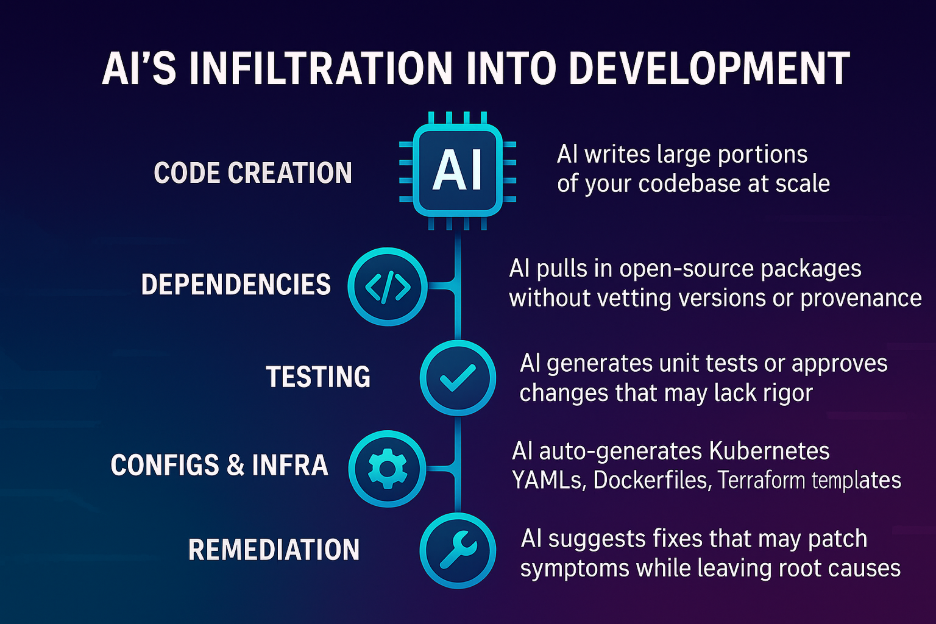

The evil twin isn’t malicious by design — it’s a byproduct of AI’s infiltration into nearly every stage of development:

- Code creation – AI writes large portions of your codebase at scale.

- Dependencies – AI pulls in open-source packages without vetting versions or provenance.

- Testing – AI generates unit tests that may lack rigor, or approves changes with little real scrutiny.

- Configs and infra – AI auto-generates Kubernetes YAMLs, Dockerfiles, Terraform templates.

- Remediation – AI suggests fixes that may patch symptoms while leaving root causes untouched.

The result is a pipeline that resembles your own — but lacks the data integrity, reliability, and governance you’ve spent years building.

Sure, It’s a Problem. But Is It Really That Bad?

You love the velocity that AI provides, but this parallel SDLC compounds risk by its very nature. Human-created debt accumulates one commit at a time; AI can replicate insecure patterns across dozens of repos in hours.

And the stats from the Future of AppSec report speak for themselves:

- 81% of orgs knowingly ship vulnerable code — often to meet deadlines.

- 33% of developers admit they “hope vulnerabilities won’t be discovered” before release.

- 98% of organizations experienced at least one breach from vulnerable code in the past year — up from 91% in 2024 and 78% in 2023.

- The share of orgs reporting 4+ breaches jumped from 16% in 2024 to 27% in 2025.

That surge isn’t random. It correlates with the explosive rise of AI use in development. As more teams hand over larger portions of code creation to AI without governance, the result is clear: risk is scaling at machine speed, too.

Taking Back Control From the Evil Twin

You can’t stop AI from reshaping your SDLC. But you can stop it from running rogue. Here’s how:

1. Establish Robust Governance for AI in Development

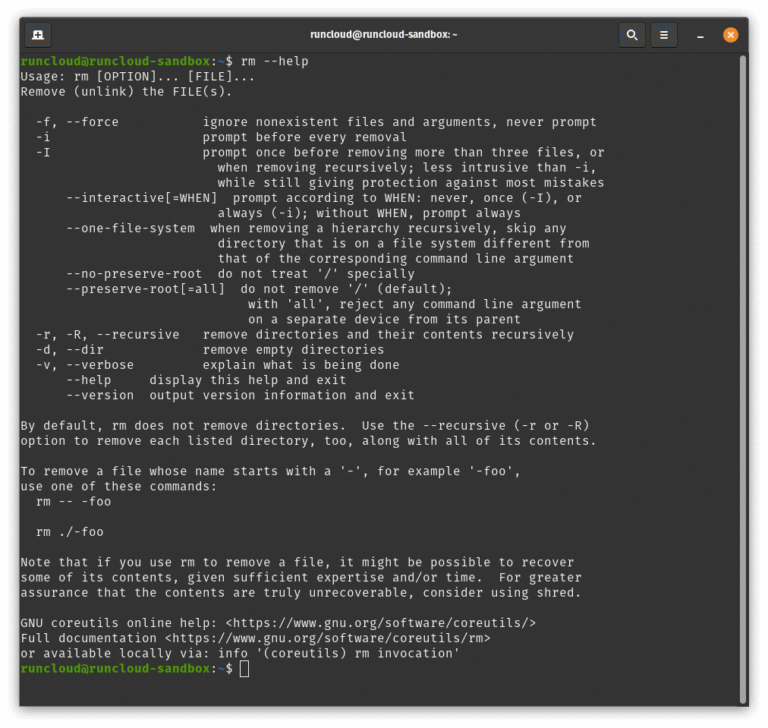

- Allowlist approved AI tools with built-in scanning, and keep a lightweight approval workflow so devs don’t default to shadow AI.

- Enforce provenance and inventory standards, such as SLSA provenance and SBOMs, for AI-generated code.

- Audit usage and tag AI contributions: require devs to mark AI-assisted commits (for example, with a commit trailer) for transparency, and run static analysis such as CodeQL on that code to catch insecure patterns. This builds reliability and integrity into the audit trail.
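To make “tag AI contributions” concrete, here is a minimal Python sketch of an audit over commit messages. It assumes a hypothetical team convention in which AI-assisted commits carry `AI-Assisted:` and `AI-Tool:` git trailers; the trailer names and the `APPROVED_AI_TOOLS` allowlist are illustrative, not a standard. The trailer parsing is a simplification of the rules `git interpret-trailers` applies.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical allowlist of approved AI tools (compared case-insensitively).
APPROVED_AI_TOOLS = {"copilot", "internal-assistant"}


@dataclass
class AuditResult:
    sha: str
    ai_assisted: bool | None  # None: the commit made no declaration at all
    tool: str | None
    problems: list[str] = field(default_factory=list)


def parse_trailers(message: str) -> dict[str, str]:
    """Return 'Key: value' trailers from the final paragraph of a commit message.

    Simplified: the last paragraph counts as a trailer block only if it is not
    the subject line and every line in it looks like 'Token: value'.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:  # subject line only, so no trailer block
        return {}
    trailers: dict[str, str] = {}
    for line in paragraphs[-1].strip().splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or not key or " " in key:
            return {}  # last paragraph is ordinary prose, not trailers
        trailers[key.lower()] = value.strip()
    return trailers


def audit_commit(sha: str, message: str) -> AuditResult:
    """Check one commit against the hypothetical AI-tagging convention."""
    trailers = parse_trailers(message)
    result = AuditResult(sha, None, trailers.get("ai-tool"))
    declared = trailers.get("ai-assisted")
    if declared is None:
        result.problems.append("missing AI-Assisted trailer")
        return result
    result.ai_assisted = declared.lower() in {"yes", "true"}
    if result.ai_assisted:
        if result.tool is None:
            result.problems.append("AI-assisted commit does not name its tool")
        elif result.tool.lower() not in APPROVED_AI_TOOLS:
            result.problems.append(f"unapproved AI tool: {result.tool}")
    return result


if __name__ == "__main__":
    msg = "Add retry logic\n\nAI-Assisted: yes\nAI-Tool: chatbot-x"
    print(audit_commit("abc123", msg).problems)  # ['unapproved AI tool: chatbot-x']
```

In practice, each message could come from `git log -1 --format=%B <sha>`, and a CI job could fail a pull request whenever `problems` is non-empty. Scanning the tagged code with a static analyzer remains a separate step.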

2. Strengthen Supply Chain Oversight

AI assistants are now pulling in OSS dependencies you didn’t choose — sometimes outdated, sometimes insecure, sometimes flat-out malicious.

Your team may already use hygiene tools like Dependabot or Renovate, but they’re table stakes, not governance.

On their own, they won’t reliably tell you whether AI just pulled in a transitive package with a critical vulnerability, or whether your dependency chain is riddled with license risks.

That’s why modern SCA is essential in the AI era. It goes beyond auto-bumping versions to:

- Generate SBOMs for visibility into everything AI adds to your repos.

- Analyze transitive dependencies several layers deep.

- Provide exploitable-path analysis so you prioritize what’s actually risky.

Auto-updaters are hygiene. SCA is resilience.
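A minimal sketch of the “several layers deep” idea: given a CycloneDX JSON SBOM, walk its `dependencies` graph breadth-first from the root component and report the shortest chain that pulls in each vulnerable package. The set of vulnerable `bom-ref` values is a hypothetical input you would get from your SCA tool’s advisory matching, and the package names are made up. This covers dependency-graph reachability only; real exploitable-path analysis goes further and checks whether your code actually calls the vulnerable functions.

```python
from __future__ import annotations

from collections import deque


def shortest_parents(sbom: dict) -> dict[str, str | None]:
    """BFS from the root component; maps each reachable bom-ref to its parent."""
    root = sbom["metadata"]["component"]["bom-ref"]
    graph = {d["ref"]: d.get("dependsOn", []) for d in sbom.get("dependencies", [])}
    parent: dict[str, str | None] = {root: None}
    queue = deque([root])
    while queue:
        ref = queue.popleft()
        for child in graph.get(ref, []):
            if child not in parent:  # first visit in BFS = shortest chain; also stops cycles
                parent[child] = ref
                queue.append(child)
    return parent


def chain_to(parent: dict[str, str | None], ref: str) -> list[str]:
    """Follow parent links back to the root, returning [root, ..., ref]."""
    chain: list[str] = []
    node: str | None = ref
    while node is not None:
        chain.append(node)
        node = parent[node]
    return chain[::-1]


def vulnerable_chains(sbom: dict, vulnerable_refs: set[str]) -> list[list[str]]:
    """Shortest dependency chain to each reachable vulnerable component, deepest first."""
    parent = shortest_parents(sbom)
    chains = [chain_to(parent, ref) for ref in vulnerable_refs if ref in parent]
    return sorted(chains, key=lambda c: (-len(c), c[-1]))


if __name__ == "__main__":
    # Trimmed CycloneDX document; the "components" list is omitted for brevity.
    sbom = {
        "bomFormat": "CycloneDX",
        "metadata": {"component": {"bom-ref": "app", "type": "application", "name": "app"}},
        "dependencies": [
            {"ref": "app", "dependsOn": ["pkg:npm/web-lib@2.1.0"]},
            {"ref": "pkg:npm/web-lib@2.1.0", "dependsOn": ["pkg:npm/parser@0.9.0"]},
            {"ref": "pkg:npm/parser@0.9.0", "dependsOn": ["pkg:npm/regex-lite@1.0.0"]},
        ],
    }
    vulnerable = {"pkg:npm/regex-lite@1.0.0", "pkg:npm/not-installed@3.0.0"}
    for chain in vulnerable_chains(sbom, vulnerable):
        print(" -> ".join(chain))
    # app -> pkg:npm/web-lib@2.1.0 -> pkg:npm/parser@0.9.0 -> pkg:npm/regex-lite@1.0.0
```

The chain shows which direct dependency (here `web-lib`) drags the vulnerable package in three levels deep, which is usually where a fix has to start.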

3. Measure and Manage Debt Velocity

- Track debt velocity — measure how fast vulnerabilities are introduced and fixed across repos.

- Set sprint-based SLAs — if issues linger, AI will replicate them across projects before you’ve logged the ticket.

- Flag AI-generated commits for extra review to stop insecure patterns from multiplying.

- Adopt agentic AI AppSec assistants. The Future of AppSec report highlights that traditional remediation cycles can’t keep pace with machine-speed risk, making autonomous prevention and real-time remediation a necessity, not a luxury.
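Debt velocity and sprint SLAs reduce to simple arithmetic over your findings. The sketch below assumes each finding is exported from whatever scanner you use as a record with a repo, an introduction date, an optional fix date, and an `ai_generated` flag (for example, derived from the commit tagging mentioned under governance). The `Finding` type, its field names, and the 14-day default SLA are assumptions for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Finding:
    repo: str
    introduced: date
    fixed: date | None = None  # None means the finding is still open
    ai_generated: bool = False


def debt_velocity(findings: list[Finding], start: date, end: date) -> dict[str, dict[str, int]]:
    """Per-repo counts of findings introduced and fixed within [start, end]."""
    stats: dict[str, dict[str, int]] = defaultdict(
        lambda: {"introduced": 0, "fixed": 0, "ai_introduced": 0}
    )
    for f in findings:
        if start <= f.introduced <= end:
            stats[f.repo]["introduced"] += 1
            if f.ai_generated:
                stats[f.repo]["ai_introduced"] += 1
        if f.fixed is not None and start <= f.fixed <= end:
            stats[f.repo]["fixed"] += 1
    for s in stats.values():
        s["net"] = s["introduced"] - s["fixed"]  # > 0 means debt is growing
    return dict(stats)


def sla_breaches(findings: list[Finding], today: date, sla_days: int = 14) -> list[Finding]:
    """Open findings older than the SLA (default: one two-week sprint)."""
    return [
        f for f in findings
        if f.fixed is None and (today - f.introduced).days > sla_days
    ]


if __name__ == "__main__":
    findings = [
        Finding("payments", date(2025, 9, 1), date(2025, 9, 5), ai_generated=True),
        Finding("payments", date(2025, 9, 3), ai_generated=True),
        Finding("payments", date(2025, 9, 10)),
        Finding("web", date(2025, 8, 20), date(2025, 9, 2)),
    ]
    print(debt_velocity(findings, date(2025, 9, 1), date(2025, 9, 14)))
    for f in sla_breaches(findings, today=date(2025, 9, 20)):
        print(f"SLA breach in {f.repo}: open since {f.introduced}")
```

A positive `net` means a repo is accumulating vulnerabilities faster than the team fixes them; a high `ai_introduced` count is a signal to route that repo’s AI-assisted commits to extra review.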

4. Foster a Culture of Reliable AI Use

- Train on AI risks like data poisoning and prompt injection.

- Make secure AI adoption part of the “definition of done.” Align incentives with secure delivery, not just speed.

- Create a reliable feedback loop — encourage devs to challenge governance rules that hurt productivity. Collaboration beats resistance.

5. Build Resilience for Legacy Systems

Legacy apps are where your evil twin SDLC hides best. With years of accumulated debt and brittle architectures, AI-generated code can slip in undetected.

These systems were built when cyber threats were far less sophisticated, and many lack modern security features like multi-factor authentication, advanced encryption, and proper access controls.

When AI is bolted onto these antiquated platforms, it doesn’t just inherit their existing vulnerabilities; it can rapidly propagate insecure patterns across interconnected systems that were never designed to handle AI-generated code.

The result is a cascade effect: a single compromised AI interaction can spread through poorly secured legacy infrastructure faster than your security team can detect it.

Here’s what’s often missed:

- Manual before automatic: Running full automation on legacy repos without a baseline can drown teams in false positives and noise. Start by building and manually reviewing SBOMs for the most critical apps to establish an accurate baseline, then scale automation.

- Triage by risk, not by age: Not every legacy system deserves equal attention. Prioritize repos with heavy AI use, repeated vulnerability patterns, or high business impact.

- Hybrid skills are mandatory: Devs need to learn how to validate AI-generated changes in legacy contexts, because AI doesn’t “understand” old frameworks. A dependency bump that looks harmless in 2025 might silently break a 2012-era API.
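The “triage by risk, not by age” rule can be expressed as a weighted score. In this sketch, repository age is deliberately absent; only the three signals named above go in. The `LegacyRepo` fields, the 1-to-5 impact scale, and the weights are hypothetical and should be tuned to your own risk model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LegacyRepo:
    name: str
    ai_commit_share: float  # 0.0-1.0, share of recent commits flagged as AI-assisted
    repeated_patterns: int  # vulnerability patterns seen more than once in the repo
    business_impact: int    # 1 (low) to 5 (critical), set by the owning team


# Hypothetical weights; they sum to 1.0 so scores stay in the 0-1 range.
WEIGHTS = {"ai": 0.4, "patterns": 0.3, "impact": 0.3}


def risk_score(repo: LegacyRepo, max_patterns: int) -> float:
    """Weighted score from the three triage signals, each normalized to 0-1."""
    patterns = repo.repeated_patterns / max_patterns if max_patterns else 0.0
    impact = (repo.business_impact - 1) / 4  # map 1..5 onto 0..1
    return (
        WEIGHTS["ai"] * repo.ai_commit_share
        + WEIGHTS["patterns"] * patterns
        + WEIGHTS["impact"] * impact
    )


def triage(repos: list[LegacyRepo], top_n: int = 3) -> list[tuple[str, float]]:
    """Rank repos by risk score, highest first; note that age is never consulted."""
    max_patterns = max((r.repeated_patterns for r in repos), default=0)
    ranked = sorted(repos, key=lambda r: risk_score(r, max_patterns), reverse=True)
    return [(r.name, round(risk_score(r, max_patterns), 2)) for r in ranked[:top_n]]


if __name__ == "__main__":
    repos = [
        LegacyRepo("billing-2012", 0.6, 8, 5),
        LegacyRepo("intranet-wiki", 0.1, 1, 1),
        LegacyRepo("claims-api", 0.5, 4, 3),
    ]
    print(triage(repos, top_n=2))  # [('billing-2012', 0.84), ('claims-api', 0.5)]
```

The top entries are natural candidates for the manual SBOM baseline before any automation is switched on.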

Conclusion: Bring the ‘Evil Twin’ Back into the Family

The “evil twin” of your SDLC isn’t going away. It’s already here, writing code, pulling dependencies, and shaping workflows.

The question is whether you’ll treat it as an uncontrolled shadow pipeline — or bring it under the same governance and accountability as your human-led one.

Because in today’s environment, you don’t just own the SDLC you designed. You also own the one AI is building — whether you control it or not.

Interested in learning more about SDLC challenges in 2025 and beyond? More stats and insights are available in the Future of AppSec report mentioned above.
