Designers Are Being Exploited—Here’s How We’re Getting Screwed (And How To Fight Back)

If you’re a designer, you’ve probably felt it by now: the creeping, soul-crushing pressure of platforms…

WordPress Campus Connect, initially launched in October 2024 as a pilot program, has now been formally…

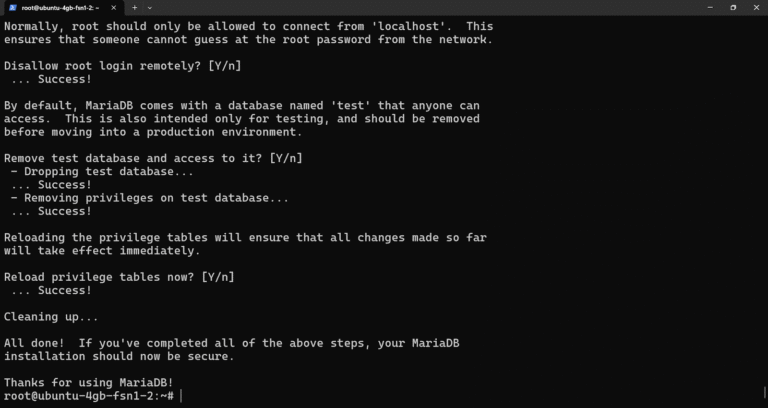

Most Ubuntu servers can handle far more than a single website. Hosting multiple sites on the…

Schlenker-Goodrich, of the Western Environmental Law Center (WELC), is concerned about the administration’s efforts to isolate…

Success in today’s complex, engineering-led enterprise organizations, where autonomy and scalability are paramount, hinges on more…

Off-screen menus, often represented in their most common form as the hamburger menu, have become a…

Last year, I saw someone miss out on their dream domain name because they didn’t know…

WordPress 6.8.1 is now available! This minor release includes fixes for 15 bugs throughout Core and…

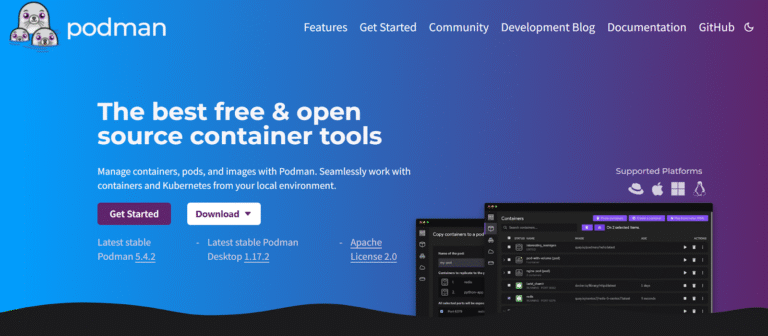

Docker has changed software development and application deployment through containerization. Its intuitive command-line interface, tools such…

I began using Build Tools a few years ago, even before it became open-source. Today, it…

To be sure, Patrick Stewart’s regal delivery in the early game helps paper over a lot…

A continuation represents the control state of computation at a given point during evaluation. Delimited Continuations…

“How do I make this page private for members only?” That’s one of the most common…

🚨 Here are 6 of the best cold wallets on the market: https://www.youtube.com/watch?v=SopLHiaLRdE Chapters: 0:00 – XRP…

This group is called Tech Harbour Services, and it is located in New Garden Town in Lahore,…